7. Sparse Kernel Machines

import numpy as np
import matplotlib.pyplot as plt
%matplotlib inline
from prml.kernel import (
    RBF,
    PolynomialKernel,
    SupportVectorClassifier,
    RelevanceVectorRegressor,
    RelevanceVectorClassifier
)

np.random.seed(1234)

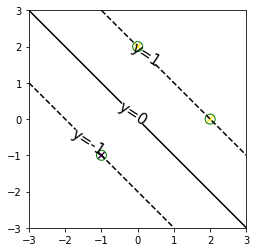

7.1 Maximum Margin Classifiers

# Three linearly separable points in 2-D with targets in {-1, +1}
x_train = np.array([
    [0., 2.],
    [2., 0.],
    [-1., -1.]])
y_train = np.array([1., 1., -1.])

# Maximum-margin classifier with a degree-1 (linear) polynomial kernel
model = SupportVectorClassifier(PolynomialKernel(degree=1))
model.fit(x_train, y_train)

# Evaluate the classifier on a 100 x 100 grid covering [-3, 3]^2
x0, x1 = np.meshgrid(np.linspace(-3, 3, 100), np.linspace(-3, 3, 100))
x = np.array([x0, x1]).reshape(2, -1).T
plt.scatter(x_train[:, 0], x_train[:, 1], s=40, c=y_train, marker="x")
# Circle the points retained by the fitted model (the support vectors)
plt.scatter(model.X[:, 0], model.X[:, 1], s=100, facecolor="none", edgecolor="g")
# Decision boundary y = 0 (solid) and margins y = -1, +1 (dashed)
cp = plt.contour(x0, x1, model.distance(x).reshape(100, 100), np.array([-1, 0, 1]), colors="k", linestyles=("dashed", "solid", "dashed"))
plt.clabel(cp, fmt='y=%.f', inline=True, fontsize=15)
plt.xlim(-3, 3)
plt.ylim(-3, 3)
plt.gca().set_aspect("equal", adjustable="box")

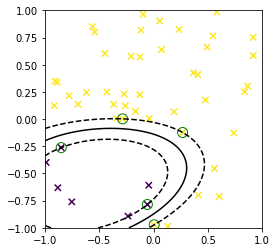
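This three-point problem is small enough to check the maximum-margin solution directly. The sketch below assumes that all three points are support vectors, so each satisfies t_n y(x_n) = 1. Together with the dual constraint Σ_n a_n t_n = 0, that assumption gives a linear system in (a, b). Because every recovered a_n is positive and all constraints hold, the KKT conditions are met, so the solution is optimal.

The plain linear kernel k(x, x') = xᵀx' is used here. This notebook does not show whether `PolynomialKernel(degree=1)` adds a constant term. A constant would not change the result, because Σ_n a_n t_n = 0 cancels it.

```python
import numpy as np

X = np.array([[0., 2.], [2., 0.], [-1., -1.]])   # same toy inputs as above
t = np.array([1., 1., -1.])                       # targets in {-1, +1}
K = X @ X.T                                       # linear kernel k(x, x') = x^T x'
n = len(t)

# Assume every point is a support vector: t_n * y(x_n) = 1 for all n,
# plus the dual constraint sum_n a_n t_n = 0. Unknowns: a_1..a_n and b.
A = np.zeros((n + 1, n + 1))
A[:n, :n] = K * t      # row i: sum_j k(x_i, x_j) t_j a_j
A[:n, n] = 1.0         # ... + b
A[n, :n] = t           # sum_j a_j t_j = 0
rhs = np.append(t, 0.0)
sol = np.linalg.solve(A, rhs)
a, b = sol[:n], sol[n]

# A negative multiplier would mean the "all points are support vectors" guess was wrong
assert np.all(a > 0)
w = (a * t) @ X                 # w = sum_n a_n t_n x_n
margin = 1.0 / np.linalg.norm(w)
print(a, b, w, margin)
```

Working by hand gives a = (1/8, 1/8, 1/4), b = 0 and w = (½, ½). The margin is therefore 1/‖w‖ = √2. This matches the figure, where all three points sit on the dashed margin lines.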

def create_toy_data():
    # 50 points drawn uniformly from [-1, 1]^2
    x = np.random.uniform(-1, 1, 100).reshape(-1, 2)
    # Label -1 when both coordinates are negative, +1 otherwise
    y = x < 0
    y = (y[:, 0] * y[:, 1]).astype(float)
    return x, 1 - 2 * y

x_train, y_train = create_toy_data()
model = SupportVectorClassifier(RBF(np.ones(3)))
model.fit(x_train, y_train)

x0, x1 = np.meshgrid(np.linspace(-1, 1, 100), np.linspace(-1, 1, 100))
x = np.array([x0, x1]).reshape(2, -1).T
plt.scatter(x_train[:, 0], x_train[:, 1], s=40, c=y_train, marker="x")
plt.scatter(model.X[:, 0], model.X[:, 1], s=100, facecolor="none", edgecolor="g")
plt.contour(
    x0, x1, model.distance(x).reshape(100, 100),
    np.arange(-1, 2), colors="k", linestyles=("dashed", "solid", "dashed"))
plt.xlim(-1, 1)
plt.ylim(-1, 1)
plt.gca().set_aspect("equal", adjustable="box")

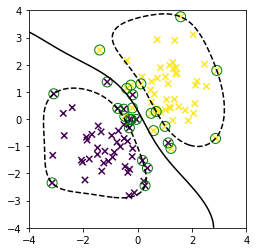
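The second classifier replaces the linear kernel with `RBF(np.ones(3))`. One plausible reading of that three-element parameter vector treats the first entry as an amplitude θ₀ and the remaining entries as per-dimension precisions θ_d, giving k(x, x') = θ₀ exp(-½ Σ_d θ_d (x_d - x'_d)²). This parameterisation is an assumption, not confirmed by the notebook.

The helpers `rbf_gram` and `decision_function` are hypothetical names. The second one evaluates the SVM output y(x) = Σ_n a_n t_n k(x, x_n) + b, summed over support vectors only. Its level sets at 0 and ±1 are the contours drawn above.

```python
import numpy as np

def rbf_gram(X, Y, params):
    """K[i, j] = params[0] * exp(-0.5 * sum_d params[1 + d] * (X[i, d] - Y[j, d])**2).

    Assumed parameterisation: params[0] is an amplitude, params[1:] are
    per-dimension precisions (inverse squared length scales).
    """
    diff = X[:, None, :] - Y[None, :, :]          # pairwise differences, shape (N, M, D)
    return params[0] * np.exp(-0.5 * np.sum(params[1:] * diff ** 2, axis=-1))

def decision_function(x, X_sv, t_sv, a_sv, b, params):
    """SVM output y(x) = sum_n a_n t_n k(x, x_n) + b over the support vectors."""
    return rbf_gram(x, X_sv, params) @ (a_sv * t_sv) + b

params = np.ones(3)
pts = np.array([[0., 0.], [1., 1.]])
K = rbf_gram(pts, pts, params)                    # symmetric, with params[0] on the diagonal
y0 = decision_function(np.array([[0., 0.]]), pts,
                       np.array([1., -1.]), np.array([0.5, 0.5]), 0.1, params)
print(K, y0)
```

Only the support vectors enter the sum. That is what makes prediction cheap once training is done.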

7.1.1 Overlapping class distributions

def create_toy_data():
    # Two overlapping Gaussian clusters centred at (-1, -1) and (1, 1)
    x0 = np.random.normal(size=100).reshape(-1, 2) - 1.
    x1 = np.random.normal(size=100).reshape(-1, 2) + 1.
    x = np.concatenate([x0, x1])
    y = np.concatenate([-np.ones(50), np.ones(50)]).astype(int)
    return x, y

x_train, y_train = create_toy_data()
# Soft-margin SVM: C = 1 bounds each dual coefficient and allows margin violations
model = SupportVectorClassifier(RBF(np.array([1., 0.5, 0.5])), C=1.)
model.fit(x_train, y_train)

x0, x1 = np.meshgrid(np.linspace(-4, 4, 100), np.linspace(-4, 4, 100))
x = np.array([x0, x1]).reshape(2, -1).T
plt.scatter(x_train[:, 0], x_train[:, 1], s=40, c=y_train, marker="x")
plt.scatter(model.X[:, 0], model.X[:, 1], s=100, facecolor="none", edgecolor="g")
plt.contour(x0, x1, model.distance(x).reshape(100, 100), np.arange(-1, 2), colors="k", linestyles=("dashed", "solid", "dashed"))
plt.xlim(-4, 4)
plt.ylim(-4, 4)
plt.gca().set_aspect("equal", adjustable="box")

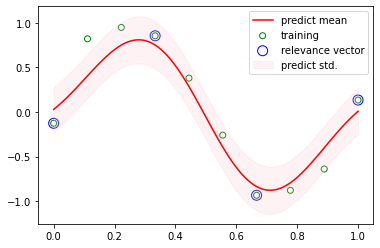
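With a finite C, the dual coefficients satisfy the box constraint 0 ≤ a_n ≤ C. This splits the support vectors into two groups:

- Points with 0 < a_n < C lie exactly on the margin.
- Points with a_n = C lie inside the margin or are misclassified.

The bias is then obtained from the margin points only, averaging t_n - Σ_m a_m t_m k(x_n, x_m) over them, following PRML's averaging for numerical stability.

The sketch below assumes the coefficients `a` have already been found by some QP solver. The helper name `categorize_and_bias` is hypothetical. As a check, it is fed the hard-margin solution of the three-point example from Section 7.1, a = (1/8, 1/8, 1/4), which has b = 0.

```python
import numpy as np

def categorize_and_bias(a, t, K, C, tol=1e-8):
    """Classify training points by their dual coefficient and return the bias b.

    a: dual coefficients (assumed already obtained from a QP solver), shape (N,)
    t: targets in {-1, +1}; K: Gram matrix k(x_n, x_m); C: box constraint.
    """
    sv = a > tol                    # support vectors: a_n > 0
    margin = sv & (a < C - tol)     # 0 < a_n < C: on the margin (xi_n = 0)
    inside = sv & ~margin           # a_n = C: inside the margin or misclassified
    # Average over margin SVs of t_n - sum_{m in SV} a_m t_m k(x_n, x_m)
    b = np.mean(t[margin] - K[np.ix_(margin, sv)] @ (a[sv] * t[sv]))
    return sv, margin, inside, b

X = np.array([[0., 2.], [2., 0.], [-1., -1.]])
t = np.array([1., 1., -1.])
a = np.array([0.125, 0.125, 0.25])
sv, margin, inside, b = categorize_and_bias(a, t, X @ X.T, C=1.0)
print(margin, inside, b)
```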

7.2 Relevance Vector Machines

7.2.1 RVM for regression

def create_toy_data(n=10):
    x = np.linspace(0, 1, n)
    t = np.sin(2 * np.pi * x) + np.random.normal(scale=0.1, size=n)
    return x, t

x_train, y_train = create_toy_data(n=10)
x = np.linspace(0, 1, 100)
model = RelevanceVectorRegressor(RBF(np.array([1., 20.])))
model.fit(x_train, y_train)
y, y_std = model.predict(x)

plt.scatter(x_train, y_train, facecolor="none", edgecolor="g", label="training")
plt.scatter(model.X.ravel(), model.t, s=100, facecolor="none", edgecolor="b", label="relevance vector")
plt.plot(x, y, color="r", label="predict mean")
plt.fill_between(x, y - y_std, y + y_std, color="pink", alpha=0.2, label="predict std.")
plt.legend(loc="best")
plt.show()

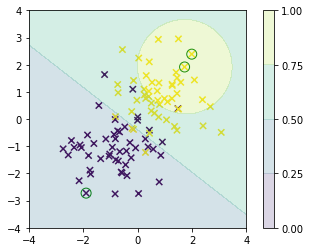
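The RVM places one basis function on each training input. It gives each weight its own prior precision α_i and re-estimates those precisions by evidence maximisation. Each iteration applies the following updates:

- Posterior covariance: Σ = (A + βΦᵀΦ)⁻¹, with A = diag(α).
- Posterior mean: m = βΣΦᵀt.
- Well-determinedness: γ_i = 1 - α_i Σ_ii.
- Prior precisions: α_i ← γ_i / m_i².
- Noise precision: 1/β ← ‖t - Φm‖² / (N - Σ_i γ_i).

Weights whose α_i diverges are pruned, and the surviving inputs are the relevance vectors. The predictive variance is 1/β + φ(x)ᵀΣφ(x).

The sketch below is a from-scratch NumPy version of this loop, not the prml library's code. The names `fit_rvm` and `predict_rvm`, the pruning threshold `alpha_max` and the kernel form exp(-½·precision·(x - x')²) are all assumptions. The precision of 20 loosely mirrors `RBF(np.array([1., 20.]))`.

```python
import numpy as np

def rbf(x, y, precision=20.0):
    """1-D Gaussian kernel exp(-0.5 * precision * (x - y)**2) (assumed form)."""
    return np.exp(-0.5 * precision * (x[:, None] - y[None, :]) ** 2)

def posterior(P, t, alpha, beta):
    """Gaussian weight posterior N(m, Sigma) given prior precisions alpha and noise precision beta."""
    Sigma = np.linalg.inv(np.diag(alpha) + beta * P.T @ P)
    m = beta * Sigma @ P.T @ t
    return m, Sigma

def fit_rvm(x, t, precision=20.0, n_iter=100, alpha_max=1e6):
    Phi = rbf(x, x, precision)          # one basis function centred on each training input
    N = len(t)
    alpha = np.ones(N)                  # prior precision of each weight
    beta = 1.0                          # noise precision
    active = np.ones(N, dtype=bool)     # basis functions not yet pruned
    for _ in range(n_iter):
        P = Phi[:, active]
        m, Sigma = posterior(P, t, alpha[active], beta)
        # gamma_i in [0, 1] measures how well the data determine weight i
        gamma = np.clip(1.0 - alpha[active] * np.diag(Sigma), 1e-10, 1.0)
        alpha[active] = gamma / (m ** 2 + 1e-12)
        beta = (N - gamma.sum()) / np.sum((t - P @ m) ** 2)
        active = alpha < alpha_max      # a huge alpha pins its weight at zero: prune it
    # Recompute the posterior so m and Sigma match the final active set
    m, Sigma = posterior(Phi[:, active], t, alpha[active], beta)
    return {"x": x[active], "m": m, "Sigma": Sigma, "beta": beta, "precision": precision}

def predict_rvm(model, x_new):
    P = rbf(x_new, model["x"], model["precision"])
    mean = P @ model["m"]
    # Predictive variance 1/beta + phi(x)^T Sigma phi(x) for each row of P
    var = 1.0 / model["beta"] + np.sum((P @ model["Sigma"]) * P, axis=1)
    return mean, np.sqrt(var)

rng = np.random.default_rng(0)
x_train = np.linspace(0, 1, 10)
t_train = np.sin(2 * np.pi * x_train) + rng.normal(scale=0.1, size=10)
model = fit_rvm(x_train, t_train)
x_grid = np.linspace(0, 1, 100)
mean, std = predict_rvm(model, x_grid)
print(len(model["x"]), "relevance vectors")
```

Pruning permanently here, once α_i exceeds `alpha_max`, is a simplification. It keeps the matrix inversions small and well conditioned.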

7.2.3 RVM for classification

def create_toy_data():
    # Same two Gaussian clusters as in 7.1.1, but with class labels 0 and 1
    x0 = np.random.normal(size=100).reshape(-1, 2) - 1.
    x1 = np.random.normal(size=100).reshape(-1, 2) + 1.
    x = np.concatenate([x0, x1])
    y = np.concatenate([np.zeros(50), np.ones(50)]).astype(int)
    return x, y

x_train, y_train = create_toy_data()
model = RelevanceVectorClassifier(RBF(np.array([1., 0.5, 0.5])))
model.fit(x_train, y_train)

x0, x1 = np.meshgrid(np.linspace(-4, 4, 100), np.linspace(-4, 4, 100))
x = np.array([x0, x1]).reshape(2, -1).T
plt.scatter(x_train[:, 0], x_train[:, 1], s=40, c=y_train, marker="x")
plt.scatter(model.X[:, 0], model.X[:, 1], s=100, facecolor="none", edgecolor="g")
# Filled contours of the predicted probability of class 1
plt.contourf(x0, x1, model.predict_proba(x).reshape(100, 100), np.linspace(0, 1, 5), alpha=0.2)
plt.colorbar()
plt.xlim(-4, 4)
plt.ylim(-4, 4)
plt.gca().set_aspect("equal", adjustable="box")
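For classification, the weight posterior is no longer Gaussian. PRML therefore uses a Laplace approximation, alternating two steps:

- Find the posterior mode w* by Newton-Raphson (IRLS), using the gradient Φᵀ(t - y) - Aw and the negative Hessian ΦᵀBΦ + A, where B = diag(y_n(1 - y_n)).
- Re-estimate α_i = γ_i / w_i*² with γ_i = 1 - α_i Σ_ii and Σ = (ΦᵀBΦ + A)⁻¹.

The sketch below is a from-scratch NumPy version of that loop, not the prml library's code. The names `laplace`, `fit_rvm_classifier` and `predict_proba`, the isotropic kernel with precision 0.5 and the threshold `alpha_max` are assumptions. Prediction simply plugs in the mode weights and skips any predictive-variance (probit) correction.

```python
import numpy as np

def sigmoid(a):
    return 0.5 * (1.0 + np.tanh(0.5 * a))    # logistic sigmoid written to avoid exp overflow

def rbf(X, Y, precision=0.5):
    """Isotropic Gaussian kernel exp(-0.5 * precision * ||x - y||^2) (assumed form)."""
    d2 = np.sum((X[:, None, :] - Y[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * precision * d2)

def laplace(P, t, alpha, w, n_newton=25):
    """Newton-Raphson (IRLS) for the posterior mode, then Sigma = (P^T B P + A)^-1 there."""
    A = np.diag(alpha)
    for _ in range(n_newton):
        y = sigmoid(P @ w)
        grad = P.T @ (t - y) - A @ w                  # gradient of log posterior
        H = P.T @ (P * (y * (1 - y))[:, None]) + A    # negative Hessian
        w = w + np.linalg.solve(H, grad)
    y = sigmoid(P @ w)
    Sigma = np.linalg.inv(P.T @ (P * (y * (1 - y))[:, None]) + A)
    return w, Sigma

def fit_rvm_classifier(X, t, precision=0.5, n_outer=50, alpha_max=1e6):
    Phi = rbf(X, X, precision)       # one basis function per training input
    N = len(t)
    alpha = np.ones(N)
    w = np.zeros(N)
    active = np.ones(N, dtype=bool)
    for _ in range(n_outer):
        w_a, Sigma = laplace(Phi[:, active], t, alpha[active], w[active])
        gamma = np.clip(1.0 - alpha[active] * np.diag(Sigma), 1e-10, 1.0)
        alpha[active] = gamma / (w_a ** 2 + 1e-12)   # same update as in the regression case
        w[active] = w_a
        active = alpha < alpha_max                    # prune weights driven to zero
    w_a, _ = laplace(Phi[:, active], t, alpha[active], w[active])
    return X[active], w_a, precision

def predict_proba(model, X_new):
    X_rv, w, precision = model
    return sigmoid(rbf(X_new, X_rv, precision) @ w)   # plug-in prediction at the mode

rng = np.random.default_rng(1)
X = np.vstack([rng.normal(size=(50, 2)) - 1.0, rng.normal(size=(50, 2)) + 1.0])
t = np.concatenate([np.zeros(50), np.ones(50)])
model = fit_rvm_classifier(X, t)
p = predict_proba(model, X)
print(len(model[0]), "relevance vectors")
```

Warm-starting each Newton solve from the previous weights keeps the inner loop short as the α values change.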