4. Linear Models for Classification¶

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

from prml.preprocess import PolynomialFeature

from prml.linear import (

BayesianLogisticRegression,

LeastSquaresClassifier,

FishersLinearDiscriminant,

LogisticRegression,

Perceptron,

SoftmaxRegression

)

np.random.seed(1234)

def create_toy_data(add_outliers=False, add_class=False):

x0 = np.random.normal(size=50).reshape(-1, 2) - 1

x1 = np.random.normal(size=50).reshape(-1, 2) + 1.

if add_outliers:

x_1 = np.random.normal(size=10).reshape(-1, 2) + np.array([5., 10.])

return np.concatenate([x0, x1, x_1]), np.concatenate([np.zeros(25), np.ones(30)]).astype(np.int)

if add_class:

x2 = np.random.normal(size=50).reshape(-1, 2) + 3.

return np.concatenate([x0, x1, x2]), np.concatenate([np.zeros(25), np.ones(25), 2 + np.zeros(25)]).astype(np.int)

return np.concatenate([x0, x1]), np.concatenate([np.zeros(25), np.ones(25)]).astype(np.int)

4.1 Discriminant Functions¶

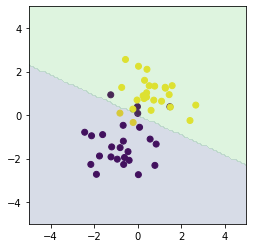

4.1.3 Least squares for classification¶

x_train, y_train = create_toy_data()

x1_test, x2_test = np.meshgrid(np.linspace(-5, 5, 100), np.linspace(-5, 5, 100))

x_test = np.array([x1_test, x2_test]).reshape(2, -1).T

feature = PolynomialFeature(1)

X_train = feature.transform(x_train)

X_test = feature.transform(x_test)

model = LeastSquaresClassifier()

model.fit(X_train, y_train)

y = model.classify(X_test)

plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train)

plt.contourf(x1_test, x2_test, y.reshape(100, 100), alpha=0.2, levels=np.linspace(0, 1, 3))

plt.xlim(-5, 5)

plt.ylim(-5, 5)

plt.gca().set_aspect('equal', adjustable='box')

plt.show()

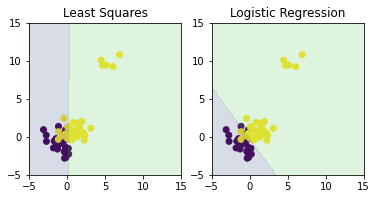

x_train, y_train = create_toy_data(add_outliers=True)

x1_test, x2_test = np.meshgrid(np.linspace(-5, 15, 100), np.linspace(-5, 15, 100))

x_test = np.array([x1_test, x2_test]).reshape(2, -1).T

feature = PolynomialFeature(1)

X_train = feature.transform(x_train)

X_test = feature.transform(x_test)

least_squares = LeastSquaresClassifier()

least_squares.fit(X_train, y_train)

y_ls = least_squares.classify(X_test)

logistic_regression = LogisticRegression()

logistic_regression.fit(X_train, y_train)

y_lr = logistic_regression.classify(X_test)

plt.subplot(1, 2, 1)

plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train)

plt.contourf(x1_test, x2_test, y_ls.reshape(100, 100), alpha=0.2, levels=np.linspace(0, 1, 3))

plt.xlim(-5, 15)

plt.ylim(-5, 15)

plt.gca().set_aspect('equal', adjustable='box')

plt.title("Least Squares")

plt.subplot(1, 2, 2)

plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train)

plt.contourf(x1_test, x2_test, y_lr.reshape(100, 100), alpha=0.2, levels=np.linspace(0, 1, 3))

plt.xlim(-5, 15)

plt.ylim(-5, 15)

plt.gca().set_aspect('equal', adjustable='box')

plt.title("Logistic Regression")

plt.show()

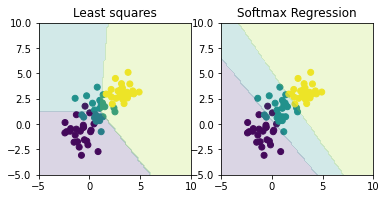

x_train, y_train = create_toy_data(add_class=True)

x1_test, x2_test = np.meshgrid(np.linspace(-5, 10, 100), np.linspace(-5, 10, 100))

x_test = np.array([x1_test, x2_test]).reshape(2, -1).T

feature = PolynomialFeature(1)

X_train = feature.transform(x_train)

X_test = feature.transform(x_test)

least_squares = LeastSquaresClassifier()

least_squares.fit(X_train, y_train)

y_ls = least_squares.classify(X_test)

logistic_regression = SoftmaxRegression()

logistic_regression.fit(X_train, y_train, max_iter=1000, learning_rate=0.01)

y_lr = logistic_regression.classify(X_test)

plt.subplot(1, 2, 1)

plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train)

plt.contourf(x1_test, x2_test, y_ls.reshape(100, 100), alpha=0.2, levels=np.array([0., 0.5, 1.5, 2.]))

plt.xlim(-5, 10)

plt.ylim(-5, 10)

plt.gca().set_aspect('equal', adjustable='box')

plt.title("Least squares")

plt.subplot(1, 2, 2)

plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train)

plt.contourf(x1_test, x2_test, y_lr.reshape(100, 100), alpha=0.2, levels=np.array([0., 0.5, 1.5, 2.]))

plt.xlim(-5, 10)

plt.ylim(-5, 10)

plt.gca().set_aspect('equal', adjustable='box')

plt.title("Softmax Regression")

plt.show()

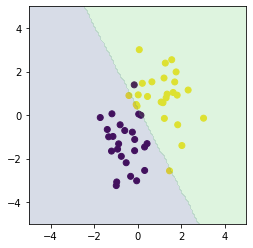

4.1.4 Fisher’s linear discriminant¶

x_train, y_train = create_toy_data()

x1_test, x2_test = np.meshgrid(np.linspace(-5, 5, 100), np.linspace(-5, 5, 100))

x_test = np.array([x1_test, x2_test]).reshape(2, -1).T

model = FishersLinearDiscriminant()

model.fit(x_train, y_train)

y = model.classify(x_test)

plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train)

plt.contourf(x1_test, x2_test, y.reshape(100, 100), alpha=0.2, levels=np.linspace(0, 1, 3))

plt.xlim(-5, 5)

plt.ylim(-5, 5)

plt.gca().set_aspect('equal', adjustable='box')

plt.show()

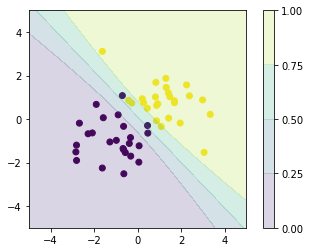

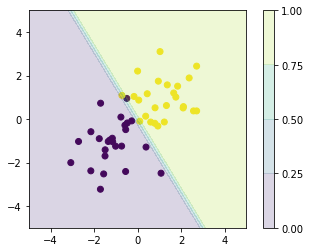

4.3 Probabilistic Discriminative Models¶

4.3.2 Logistic Regression¶

x_train, y_train = create_toy_data()

x1_test, x2_test = np.meshgrid(np.linspace(-5, 5, 100), np.linspace(-5, 5, 100))

x_test = np.array([x1_test, x2_test]).reshape(2, -1).T

feature = PolynomialFeature(degree=1)

X_train = feature.transform(x_train)

X_test = feature.transform(x_test)

model = LogisticRegression()

model.fit(X_train, y_train)

y = model.proba(X_test)

plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train)

plt.contourf(x1_test, x2_test, y.reshape(100, 100), np.linspace(0, 1, 5), alpha=0.2)

plt.colorbar()

plt.xlim(-5, 5)

plt.ylim(-5, 5)

plt.gca().set_aspect('equal', adjustable='box')

plt.show()

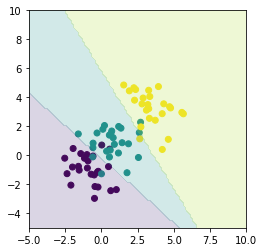

4.3.4 Multiclass logistic regression¶

x_train, y_train = create_toy_data(add_class=True)

x1, x2 = np.meshgrid(np.linspace(-5, 10, 100), np.linspace(-5, 10, 100))

x = np.array([x1, x2]).reshape(2, -1).T

feature = PolynomialFeature(1)

X_train = feature.transform(x_train)

X = feature.transform(x)

model = SoftmaxRegression()

model.fit(X_train, y_train, max_iter=1000, learning_rate=0.01)

y = model.classify(X)

plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train)

plt.contourf(x1, x2, y.reshape(100, 100), alpha=0.2, levels=np.array([0., 0.5, 1.5, 2.]))

plt.xlim(-5, 10)

plt.ylim(-5, 10)

plt.gca().set_aspect('equal', adjustable='box')

plt.show()

4.5 Bayesian Logistic Regression¶

x_train, y_train = create_toy_data()

x1_test, x2_test = np.meshgrid(np.linspace(-5, 5, 100), np.linspace(-5, 5, 100))

x_test = np.array([x1_test, x2_test]).reshape(2, -1).T

feature = PolynomialFeature(degree=1)

X_train = feature.transform(x_train)

X_test = feature.transform(x_test)

model = BayesianLogisticRegression(alpha=1.)

model.fit(X_train, y_train, max_iter=1000)

y = model.proba(X_test)

plt.scatter(x_train[:, 0], x_train[:, 1], c=y_train)

plt.contourf(x1_test, x2_test, y.reshape(100, 100), np.linspace(0, 1, 5), alpha=0.2)

plt.colorbar()

plt.xlim(-5, 5)

plt.ylim(-5, 5)

plt.gca().set_aspect('equal', adjustable='box')

plt.show()